ideas

According to what some call the strong definition of free will, articulated by René Descartes in the 17th century, you are free if, under identical circumstances, you could have acted otherwise. Identical circumstances refer to not only the same external conditions but also the same brain states. (…)

Contrast this strong notion of freedom with a more pragmatic conception called compatibilism, the dominant view in biological, psychological, legal and medical circles. You are free if you can follow your own desires and preferences. A long-term smoker who wants to quit but who lights up again and again is not free. His desire is thwarted by his addiction. Under this definition, few of us are completely free. (…)

Consider an experiment that ends with a 90 percent chance of an electron being here and a 10 percent chance of it being over there. If the experiment were repeated 1,000 times, on about 900 trials, give or take a few, the electron would be here; otherwise, it would be over there. Yet this statistical outcome does not ordain where the electron will be on the next trial. Albert Einstein could never reconcile himself to this random aspect of nature. (…)
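
The "give or take a few" in the passage above can be made concrete. What follows is a minimal Python sketch, assuming each trial is independent with a 0.9 probability of finding the electron "here". The variable names and the fixed seed are illustrative choices, not part of the quoted article.

```python
import math
import random

N_TRIALS = 1000   # repetitions of the experiment, as in the passage
P_HERE = 0.9      # probability of finding the electron "here"

# Binomial model (an assumption: trials are independent and identical).
expected_here = N_TRIALS * P_HERE
spread = math.sqrt(N_TRIALS * P_HERE * (1 - P_HERE))  # standard deviation

# One simulated run; the seed is arbitrary and only makes the run repeatable.
rng = random.Random(2012)
observed_here = sum(rng.random() < P_HERE for _ in range(N_TRIALS))

print(f"expected: {expected_here:.0f} +/- {spread:.1f}")
print(f"one simulated run: {observed_here}")
```

Under this model the expected count is 900 with a standard deviation of roughly 9.5, so most runs land within about 20 of 900, yet the average says nothing about where the electron turns up on any single trial.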

This experiment was conceived and carried out in the early 1980s by Benjamin Libet, a neuropsychologist at the University of California, San Francisco. (…)

Intuitively, the sequence of events that leads to a voluntary act must be as follows: You decide to raise your hand; your brain communicates that intention to the neurons responsible for planning and executing hand movements; and those neurons relay the appropriate commands to the motor neurons that contract the arm muscles. But Libet was not convinced. Wasn’t it more likely that the mind and the brain acted simultaneously or even that the brain acted before the mind did?

Libet set out to determine the timing of a mental event, a person’s deliberate decision, and to compare that with the timing of a physical event, the onset of the readiness potential after that decision. (…)

The results told an unambiguous story, which was bolstered by later experiments. The beginning of the readiness potential precedes the conscious decision to move by at least half a second and often by much longer. The brain acts before the mind decides! This discovery was a complete reversal of the deeply held intuition of mental causation.

{ Scientific American | Continue reading }

photo { Tabitha Soren }

ideas, neurosciences, photogs | April 13th, 2012 5:00 am

New scientific research raises the possibility that advanced versions of T. rex and other dinosaurs — monstrous creatures with the intelligence and cunning of humans — may be the life forms that evolved on other planets in the universe. “We would be better off not meeting them,” concludes the study, which appears in the Journal of the American Chemical Society. (…)

“An implication from this work is that elsewhere in the universe there could be life forms based on D-amino acids and L-sugars. Such life forms could well be advanced versions of dinosaurs, if mammals did not have the good fortune to have the dinosaurs wiped out by an asteroidal collision, as on Earth.”

{ ACS | Continue reading }

science, theory | April 12th, 2012 7:44 am

Natural selection never favors excess; if a lower-cost solution is present, it is selected for. Intelligence is a hugely costly trait. The human brain is responsible for 25 per cent of total glucose use, 20 per cent of oxygen use and 15 per cent of our total cardiac output, although making up only 2 per cent of our total body weight. Explaining the evolution of such a costly trait has been a long-standing goal in evolutionary biology, leading to a rich array of explanatory hypotheses, ranging from evasion of predators to intelligence acting as an adaptation for the evolution of culture. Among the proposed explanations, arguably the most influential has been the “social intelligence hypothesis,” which posits that it is the varied demands of social interactions that have led to advanced intelligence.

{ Proceedings of The Royal Society B | Continue reading }

brain, ideas, science | April 11th, 2012 11:36 am

Physicist: Alright, the Earth has only one mechanism for releasing heat to space, and that’s via (infrared) radiation. We understand the phenomenon perfectly well, and can predict the surface temperature of the planet as a function of how much energy the human race produces. The upshot is that at a 2.3% growth rate (conveniently chosen to represent a 10× increase every century), we would reach boiling temperature in about 400 years. And this statement is independent of technology. Even if we don’t have a name for the energy source yet, as long as it obeys thermodynamics, we cook ourselves with perpetual energy increase. (…)

Economist: Consider virtualization. Imagine that in the future, we could all own virtual mansions and have our every need satisfied: all by stimulative neurological trickery. We would still need nutrition, but the energy required to experience a high-energy lifestyle would be relatively minor. This is an example of enabling technology that obviates the need to engage in energy-intensive activities. Want to spend the weekend in Paris? You can do it without getting out of your chair.

Physicist: I see. But this is still a finite expenditure of energy per person. Not only does it take energy to feed the person (today at a rate of 10 kilocalories of energy input per kilocalorie eaten, no less), but the virtual environment probably also requires a supercomputer—by today’s standards—for every virtual voyager. The supercomputer at UCSD consumes something like 5 MW of power. Granted, we can expect improvement on this end, but today’s supercomputer eats 50,000 times as much as a person does, so there is a big gulf to cross.

{ Do the Math | Continue reading }
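
Two of the figures in the exchange above are easy to check by hand. The sketch below is a rough Python check, not the original author's calculation. It assumes simple annual compounding and a 2,000 kcal/day diet (a figure not given in the excerpt), and all variable names are illustrative. It does not attempt the 400-year boiling estimate, which needs inputs the excerpt does not state.

```python
import math

GROWTH_RATE = 0.023          # 2.3% per year, from the excerpt
SUPERCOMPUTER_WATTS = 5e6    # "something like 5 MW", from the excerpt
STATED_RATIO = 50_000        # supercomputer vs. person, from the excerpt

# Growth accumulated over one century of annual compounding.
century_factor = (1 + GROWTH_RATE) ** 100

# Years needed for a full 10x increase at this rate.
years_per_tenfold = math.log(10) / math.log(1 + GROWTH_RATE)

# Per-person power implied by the stated ratio.
implied_person_watts = SUPERCOMPUTER_WATTS / STATED_RATIO

# Cross-check against an assumed 2,000 kcal/day diet (1 kcal = 4184 J).
diet_watts = 2000 * 4184 / 86_400

print(f"growth per century: {century_factor:.2f}x")
print(f"years per 10x: {years_per_tenfold:.1f}")
print(f"implied person: {implied_person_watts:.0f} W, diet: {diet_watts:.0f} W")
```

At 2.3% per year the century factor comes out just under 10, with a full tenfold increase taking about 101 years, which matches the "conveniently chosen" remark. The 50,000-to-1 ratio implies a person running at about 100 W, close to the roughly 97 W of the assumed diet.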

economics, ideas, technology | April 11th, 2012 9:32 am

One by one, pillars of classical logic have fallen by the wayside as science progressed in the 20th century, from Einstein’s realization that measurements of space and time were not absolute but observer-dependent, to quantum mechanics, which not only put fundamental limits on what we can empirically know but also demonstrated that elementary particles and the atoms they form are doing a million seemingly impossible things at once. (…)

Eighty-seven years ago, as far as we knew, the universe consisted of a single galaxy, our Milky Way, surrounded by an eternal, static, empty void. Now we know that there are more than 100 billion galaxies in the observable universe. (…)

Combining the ideas of general relativity and quantum mechanics, we can understand how it is possible that the entire universe, matter, radiation and even space itself could arise spontaneously out of nothing, without explicit divine intervention. (…)

Perhaps most remarkable of all, not only is it now plausible, in a scientific sense, that our universe came from nothing, if we ask what properties a universe created from nothing would have, it appears that these properties resemble precisely the universe we live in.

{ Lawrence M. Krauss/LA Times | Continue reading }

artwork { Ellsworth Kelly, White curve I (black curve I), 1973 }

ideas, science, space | April 9th, 2012 3:33 pm

The New Aesthetic reeks of power relations. Drones, surveillance, media, networks, digital photography, algorithms. (…)

The ability to watch someone is a form of power. It controls the flow of information. “I know everything about you, but you know nothing about me.” Or, “I know everything about you, and all you can do is make art about the means by which I know things.” (…)

Someone is always watching. Someone has always been watching. If you’re a woman, you’ve probably known that your whole life.

{ Madeline Ashby | Continue reading | via Marginal Utility }

ideas, technology | April 7th, 2012 4:03 pm

I have heard that higher IQ people tend to have fewer children in modern times than lower IQ people. And if larger family size makes the offspring less capable, then we are pioneering interesting times.

{ comment on marginalrevolution.com }

photo { Augustin Rebetez }

ideas, kids, photogs | April 6th, 2012 9:21 am

We show that the number of gods in a universe must equal the Euler characteristics of its underlying manifold. By incorporating the classical cosmological argument for creation, this result builds a bridge between theology and physics and makes theism a testable hypothesis. Theological implications are profound since the theorem gives us new insights in the topological structure of heavens and hells. Recent astronomical observations can not reject theism, but data are slightly in favor of atheism.

{ Daniel Schoch, Gods as Topological Invariants | arXiv | Continue reading }

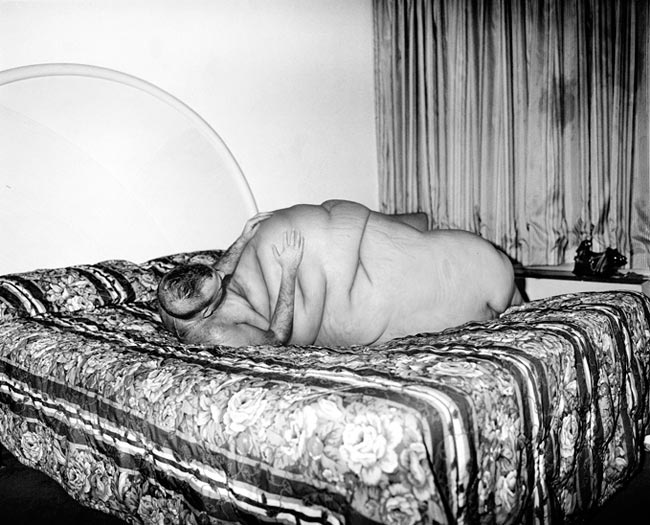

photo { Adam Bartos }

ideas, photogs | April 5th, 2012 3:34 pm

ideas, photogs | April 5th, 2012 1:00 pm

Six studies demonstrate the “pot calling the kettle black” phenomenon whereby people are guilty of the very fault they identify in others. Recalling an undeniable ethical failure, people experience ethical dissonance between their moral values and their behavioral misconduct. Our findings indicate that to reduce ethical dissonance, individuals use a double-distancing mechanism. Using an overcompensating ethical code, they judge others more harshly and present themselves as more virtuous and ethical.

{ Journal of Experimental Psychology: General | PDF | via Overcoming Bias }

related { Psychological projection is a psychological defense mechanism where a person subconsciously denies his or her own attributes, thoughts, and emotions, which are then ascribed to the outside world, usually to other people. | Wikipedia }

ideas, psychology, shit talkers | April 3rd, 2012 12:12 pm

Computer scientists have analysed thousands of memorable movie quotes to work out why we remember certain phrases and not others. (…)

They then asked individuals who had not seen the films to guess which of the two lines was the memorable one. On average, people chose correctly about 75 per cent of the time, confirming the idea that the memorable features are inherent in the lines themselves and not the result of some other factor, such as the length of the lines or their location in the film. (…)

They then compared the memorable phrases with a standard corpus of common language phrases taken from 1967, making it unlikely to contain phrases from modern films. (…)

The phrases themselves turn out to be significantly distinctive, meaning they’re made up of combinations of words that are unlikely to appear in the corpus. By contrast, memorable phrases tend to use very ordinary grammatical structures that are highly likely to turn up in the corpus.

{ The Physics arXiv Blog | Continue reading }

Linguistics, showbiz | April 3rd, 2012 12:01 pm

Computers dominate how we live, work and think. For some, the technology is a boon and promises even better things to come. But others warn that there could be bizarre consequences and that humans may be on the losing end of progress. (…)

“Economic progress ultimately signifies the ability to produce things at a lower financial cost and with less labor than in the past,” says Polish sociologist Zygmunt Bauman. As a result, he says, increasing effectiveness goes hand in hand with rising unemployment, and the unemployed merely become “human waste.”

Likewise, (…) Erik Brynjolfsson and Andrew McAfee, both scholars at MIT, argue that, for the first time in its history, technological progress is creating more jobs for computers than for people.

{ Spiegel | Continue reading }

unrelated { Competition among memes in a world with limited attention }

economics, ideas, technology, uh oh | April 3rd, 2012 7:25 am

Abracadabra is an incantation used as a magic word in stage magic tricks, and historically was believed to have healing powers when inscribed on an amulet.

The word is thought to have its origin in the Aramaic language, in which ibra (אברא) means “I have created” and k’dibra (כדברא) means “through my speech”, providing a translation of abracadabra as “created as I say.”

{ Wikipedia | Continue reading }

Linguistics | April 2nd, 2012 5:00 am

“Extraordinary claims require extraordinary evidence” was a phrase made popular by Carl Sagan who reworded Laplace’s principle, which says that “the weight of evidence for an extraordinary claim must be proportioned to its strangeness.” This statement is at the heart of the scientific method, and a model for critical thinking, rational thought and skepticism everywhere. However, no quantitative standards have been agreed upon in order to define whether or not extraordinary evidence has been obtained. Consequently, the measures of “extraordinary evidence” are completely reliant on subjective evaluation and the acceptance of “extraordinary claims.” In science, the definition of extraordinary evidence is more a social agreement than an objective evaluation, even if most scientists would state the contrary.

{ SSRN | Continue reading }

photo { Nathaniel Ward }

ideas, science | April 1st, 2012 3:05 pm

Does it seem plausible that education serves (in whole or part) as a signal of ability rather than simply a means to enhance productivity? (…)

Many MIT students will be hired by consulting firms that have no use for any of these skills. Why do these consulting firms recruit at MIT, not at Hampshire College, which produces many students with no engineering or computer science skills (let alone, knowledge of signaling models)?

Why did you choose MIT over your state university that probably costs one-third as much?

{ David Autor/MIT | PDF }

photo { Robert Frank }

economics, ideas, kids | March 30th, 2012 1:54 pm

IQ, whatever its flaws, appears to be a general factor, that is, if you do well on one kind of IQ test you will tend to do well on another, quite different, kind of IQ test. IQ also correlates well with many and varied real world outcomes. But what about creativity? Is creativity general like IQ? Or is creativity more like expertise; a person can be an expert in one field, for example, but not in another. (…)

The fact that creativity can be stimulated by drugs and travel also suggests to me a general aspect. No one ever says, if you want to master calculus take a trip but this does work if you are blocked on some types of creative projects.

{ Marginal Revolution | Continue reading }

illustration { Shag }

ideas, psychology | March 28th, 2012 12:19 pm

We are still living under the reign of logic: this, of course, is what I have been driving at. But in this day and age logical methods are applicable only to solving problems of secondary interest. The absolute rationalism that is still in vogue allows us to consider only facts relating directly to our experience. Logical ends, on the contrary, escape us. It is pointless to add that experience itself has found itself increasingly circumscribed. It paces back and forth in a cage from which it is more and more difficult to make it emerge. It too leans for support on what is most immediately expedient, and it is protected by the sentinels of common sense. Under the pretense of civilization and progress, we have managed to banish from the mind everything that may rightly or wrongly be termed superstition, or fancy; forbidden is any kind of search for truth which is not in conformance with accepted practices. It was, apparently, by pure chance that a part of our mental world which we pretended not to be concerned with any longer — and, in my opinion by far the most important part — has been brought back to light. For this we must give thanks to the discoveries of Sigmund Freud. On the basis of these discoveries a current of opinion is finally forming by means of which the human explorer will be able to carry his investigation much further, authorized as he will henceforth be not to confine himself solely to the most summary realities.

{ André Breton, Manifesto of Surrealism, 1924 | Continue reading }

Surrealism had the longest tenure of any avant-garde movement, and its members were arguably the most “political.” It emerged on the heels of World War I, when André Breton founded his first journal, Literature, and brought together a number of figures who had mostly come to know each other during the war years. They included Louis Aragon, Marc Chagall, Marcel Duchamp, Paul Eluard, Max Ernst, René Magritte, Francis Picabia, Pablo Picasso, Philippe Soupault, Yves Tanguy, and Tristan Tzara. Some were “absolute” surrealists and others were merely associated with the movement, which lasted into the 1950s. (…)

André Breton was its leading light, and he offered what might be termed the master narrative of the movement.

No other modernist trend had a theorist as intellectually sophisticated or an organizer quite as talented as Breton. No other was [as] international in its reach and as total in its confrontation with reality. No other [fused] psychoanalysis and proletarian revolution. No other was so blatant in its embrace of free association and “automatic writing.” No other would so use the audience to complete the work of art. There was no looking back to the past, as with the expressionists, and little of the macho rhetoric of the futurists. Surrealists prized individualism and rebellion—and no other movement would prove so commercially successful in promoting its luminaries. The surrealists wanted to change the world, and they did. At the same time, however, the world changed them. The question is whether their aesthetic outlook and cultural production were decisive in shaping their political worldview—or whether, beyond the inflated philosophical claims and ongoing esoteric qualifications, the connection between them is more indirect and elusive.

Surrealism was fueled by a romantic impulse. It emphasized the new against the dictates of tradition, the intensity of lived experience against passive contemplation, subjectivity against the consensually real, and the imagination against the instrumentally rational. Solidarity was understood as an inner bond with the oppressed.

{ Logos | Continue reading }

flashback, ideas, poetry | March 28th, 2012 6:12 am

People often compare education to exercise. If exercise builds physical muscles, then education builds “mental muscles.” If you take the analogy seriously, however, then you’d expect education to share both the virtues and the limitations of exercise. Most obviously: The benefits of exercise are fleeting. If you stop exercising, the payoff quickly evaporates. (…) Exercise physiologists call this detraining. As usual, there’s a big academic literature on it.

{ EconLib | Continue reading }

health, ideas, sport | March 27th, 2012 3:58 pm

“Eskimo has one hundred words for snow.” The Great Eskimo Vocabulary Hoax [PDF | Wikipedia] was demolished many years ago. (…)

People who proffer the factoid seem to think it shows that the lexical resources of a language reflect the environment in which its native speakers live. As an observation about language in general, it’s a fair point to make. Languages tend to have the words their users need and not to have words for things never used or encountered. But the Eskimo story actually says more than that. It tells us that a language and a culture are so closely bound together as to be one and the same thing. “Eskimo language” and the “snowbound world of the Eskimos” are mutually dependent things. That’s a very different proposition, and it lies at the heart of arguments about the translatability of different tongues.

Explorer-linguists observed quite correctly that the languages of peoples living in what were for them exotic locales had lots of words for exotic things, and supplied subtle distinctions among many different kinds of animals, plants, tools, and ritual objects. Accounts of so-called primitive languages generally consisted of word lists elicited from interpreters or from sessions of pointing and asking for names. But the languages of these remote cultures seemed deficient in words for “time,” “past,” “future,” “language,” “law,” “state,” “government,” “navy,” or “God.”

More particularly, the difficulty of expressing “abstract thought” of the Western kind in many Native American and African languages suggested that the capacity for abstraction was the key to the progress of the human mind… The “concrete languages” of the non-Western world were not just the reflection of the lower degree of civilization of the peoples who spoke them but the root cause of their backward state. By the dawn of the twentieth century, “too many concrete nouns” and “not enough abstractions” became the conventional qualities of “primitive” tongues.

That’s what people actually mean when they repeat the story about Eskimo words for snow. (…)

If you go into a Starbucks and ask for “coffee,” the barista most likely will give you a blank stare. To him the word means absolutely nothing. There are at least thirty-seven words for coffee in my local dialect of Coffeeshop Talk.

{ David Bellos/Big Think | Continue reading }

Linguistics | March 27th, 2012 12:56 pm

We need to understand why we often have trouble agreeing on what is true (what some have labeled science denialism). Social science has taught us that human cognition is innately, and inescapably, a process of interpreting the hard data about our world – its sights and sounds and smells and facts and ideas – through subjective affective filters that help us turn those facts into the judgments and choices and behaviors that help us survive. The brain’s imperative, after all, is not to reason. Its job is survival, and subjective cognitive biases and instincts have developed to help us make sense of information in the pursuit of safety, not so that we might come to know “THE universal absolute truth.” This subjective cognition is built-in, subconscious, beyond free will, and unavoidably leads to different interpretations of the same facts. (…)

Our subjective system of cognition can be dangerous. It can produce perceptions that conflict with the evidence, what I call The Perception Gap.

{ Big Think | Continue reading }

ideas, psychology | March 27th, 2012 7:54 am